Person Search with Natural Language Description

Paper

Dataset is available upon request (sli [at] ee.cuhk.edu.hk).

Abstract

Searching persons in large-scale image databases with different types of queries is a challenging problem, but has important applications in intelligent surveillance. Existing methods mainly focused on searching persons with image-based or attribute-based queries. We argue that such queries have major limitations for practical applications. In this paper, we propose to solve a novel problem on person search with natural language description. Given one or several descriptive sentences of a person, the algorithm is required to rank all images in the database according to the sentence-image affinities, and retrieve the most related images to the description. Since there is no existing person dataset supporting this new research direction, we propose a large-scale person description dataset with language annotations on detailed information of person images from various sources. An innovative Recurrent Neural Network with Gated Neural Attention mechanism (GNA-RNN) is proposed to solve the new person search problem. Extensive experiments and comparisons with a wide range of possible solutions and baselines demonstrate the effectiveness of our proposed GNA-RNN framework.

Contribution Highlights

- We propose to solve a new problem on searching persons with natural language. This problem setting is more practical for real-world applications and would potentially attract great attention in the future. To support the new research direction, a large-scale person appearance description dataset with rich language annotations is proposed. A series of user studies are conducted to investigate the expressive power of natural languages from human's perspective, which provide important guidance on designing deep networks and collecting training data.

- We propose a novel Recurrent Neural Network with Gated Neural Attention mechanism (GNA-RNN) for solving the problem. The Gated Neural Attention mechanism learns attention for individual visual neurons based on different word inputs, and effectively captures the relations between words and visual neurons for learning better feature representations.

- Since the problem of person search with natural language is new, we investigated a wide range of plausible solutions based on different state-of-the-art vision and language frameworks, including image captioning, visual QA, and visual-semantic embedding, to determine the optimal framework for solving the new problem.

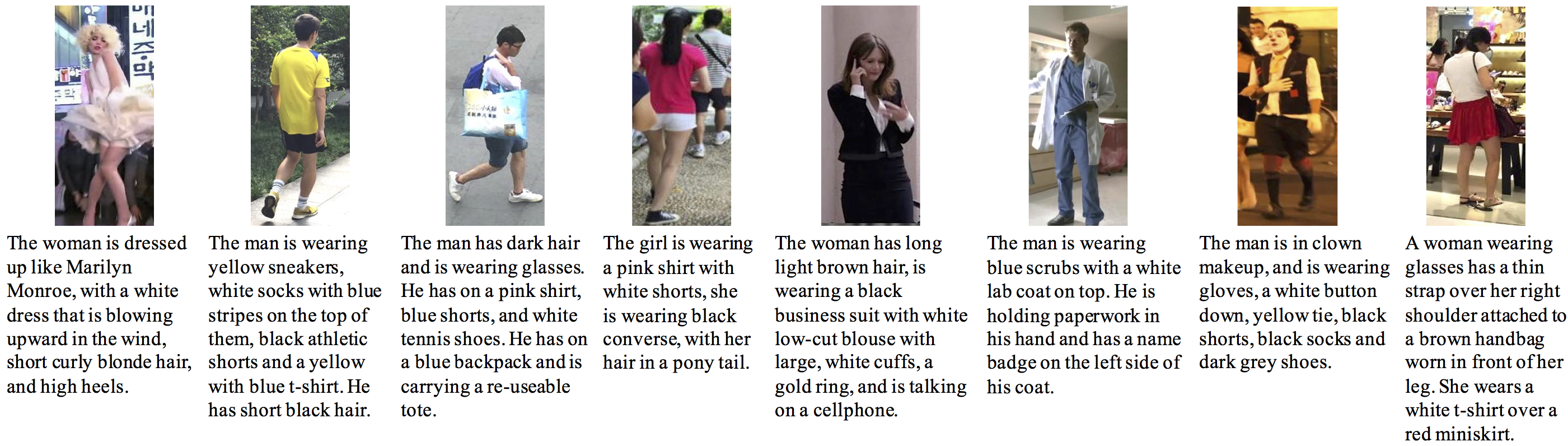

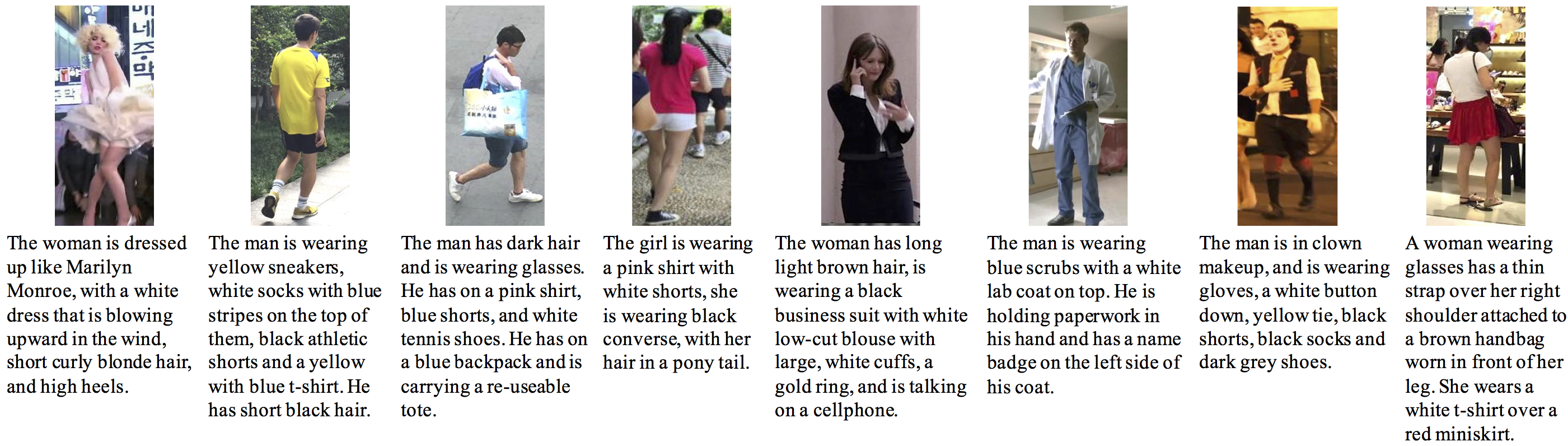

Large-scale benchmark for person search with natural language description

The dataset consists of rich and accurate annotations with open word descriptions. There were 1,993 unique workers involved in the labeling task, and all of them have greater-than 95% approving rates.

Vocabulary, phrase sizes, and sentence length are important indicators on the capacity our language dataset. There are a total of 1,893,118 words and 9,408 unique words in our dataset.

The longest sentence has 96 words and the average word length is 23.5. Most sentences have 20 to 40 words in length.

1) Train and Test

The training set consists of 11,003 persons, 34,054 images and 68,108 sentence descriptions. The validation set and test set contain 3,078 and 3,074 images, respectively, and both of them have 1,000 persons.

2) User Study

Based on our new language description dataset, we conduct extensive user studies to investigate 1) the expressive power of language descriptions compared with that of attributes, 2) the expressive power in terms of the number of sentences and sentence length, and 3) the expressive power of different word types. The studies provide us insights for understanding the new problem and guidance on designing our neural networks.

We design manual experiments to investigate the expressive power of language descriptions in terms of the number of sentences for each image and sentence length. Given the sentences for each image, we ask crowd workers from AMT to manually retrieve the corresponding images from pools of 20 images.

Left figure shows the average top-1 accuracy, top-5 accuracy, and average used time of manual person search using language descriptions with different number of sentences and different sentence lengths.

(a) (b)

Top-1 accuracy, top-5 accuracy, and average used time of manual person search results using the original sentences, and sentences with nouns, or adjectives, or verbs masked out. Left table demonstrates that the nouns provide most information followed by the adjectives, while the verbs carry least information.

Method and Performance

1) GNA-RNN

To address the problem of person search with language descriptions, we propose a novel deep neural network with Gated Neural Attention (GNA-RNN) to capture word-image relations and estimate the affinity between a sentence and a person image.

The overall structure of the GNA-RNN is shown in Figure 2, which consists of a visual sub-network (right branch) and a language sub-network (left branch). The visual sub-network generates a series of visual neurons, each of which encodes if certain human attributes or appearance patterns (e.g., white scarf) exist in the given person image. The language sub-network is a Recurrent Neural Network (RNN) with Long Short-Term Memory (LSTM) units, which takes words and images as input. At each word, it outputs neuron-level attention and word-level gate to weight the visual neurons from the visual sub-network. The neuron-level attention determines which visual neurons should be paid more attention to according to the input word. The word-level gate weight the importance of different words.

All neurons' responses are weighted by both the neuron-level attentions and word-level gates, and are then aggregated to generate the final affinity. By training such network in an end-to-end manner, the Gated Neural Attention mechanism is able to effectively capture the optimal word-image relations.

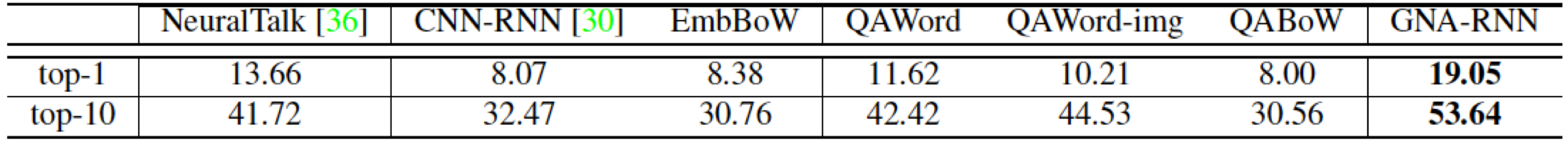

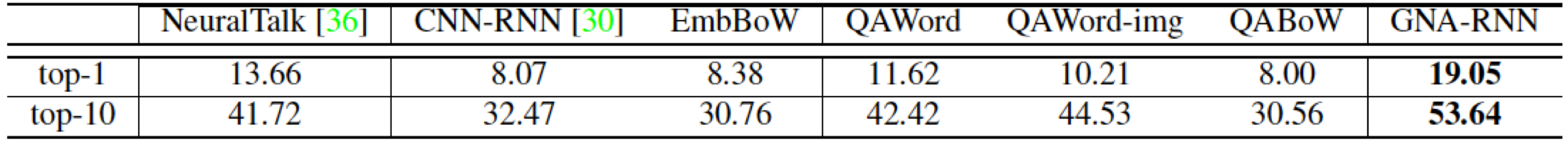

2) Evaluation

Quantitative results of the proposed GNA-RNN and compared methods on the proposed dataset.

Quantitative results of GNA-RNN on the proposed dataset without world-level gates or without neuron-level attentions.

3) Person Search Results

Reference

If you want to use our dataset or codes, please feel free to contact us (sli [at] ee.cuhk.edu.hk).

Please reference the following paper for more details:

- S. Li, T. Xiao, H. Li, B. Zhou, D. Yue and X. Wang. Person Search with Natural Language Description, arXiv

pdf